June 4, 2024

TLDR

Backfilling integrates historical data into data pipelines, ensuring completeness and mitigating failures. Mage provides a no-code UI and custom coding options to streamline backfilling for robust, resilient data pipelines.

Outline

Introduction to backfilling

Encountering problems

Mitigation strategies

Apply backfills in Mage

No code UI in Mage

Conclusion

Introduction

Backfilling is a critical step in building a data pipeline. It completes the pipeline by integrating historical data with more recent data, and it protects the pipeline's integrity by acting as a contingency pipeline when production runs fail.

The primary use case for backfilling is building resiliency into your production pipelines. Data pipelines fail or lose data for a variety of reasons, so every production pipeline needs a backup plan. Backfills can be deployed to recover the missing data, building resiliency into your pipeline.

A secondary use case for backfilling is integrating historical data into your analytics pipeline. If your data pipeline was not set up to handle a historical load on its initial run, you need a backfill to load that history.

Many batch data pipelines ingest data into their storage locations starting from a given date and moving forward. For instance, if a daily batch pipeline went into production today, it would process only records whose updated-on column matches today's date. Backfilling lets you integrate historical data for whatever timeframe you require while the pipeline continues to process future daily batches.
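To make the difference between a forward-only daily batch and a backfill concrete, here is a minimal sketch in plain Python. It is not Mage code: the in-memory `SOURCE_ROWS` list, the `updated_on` field, and the helper names are hypothetical stand-ins for a real source table. The point is that a backfill can simply replay the same per-day extraction over a historical window.

```python
from datetime import date, timedelta

# Hypothetical source rows; "updated_on" mirrors the updated-on column described above.
SOURCE_ROWS = [
    {"id": 1, "updated_on": date(2024, 5, 30)},
    {"id": 2, "updated_on": date(2024, 6, 1)},
    {"id": 3, "updated_on": date(2024, 6, 3)},
    {"id": 4, "updated_on": date(2024, 6, 4)},
]


def extract_for_day(rows, day):
    """Return only the rows updated on the given day (one daily batch)."""
    return [row for row in rows if row["updated_on"] == day]


def daily_batch(rows, today):
    """What a forward-only daily pipeline picks up on its scheduled run."""
    return extract_for_day(rows, today)


def backfill(rows, start, end):
    """Replay the daily extraction for every day in [start, end) to load history."""
    loaded = []
    day = start
    while day < end:
        loaded.extend(extract_for_day(rows, day))
        day += timedelta(days=1)
    return loaded


if __name__ == "__main__":
    today = date(2024, 6, 4)
    print([r["id"] for r in daily_batch(SOURCE_ROWS, today)])  # [4]
    print([r["id"] for r in backfill(SOURCE_ROWS, date(2024, 5, 1), today)])  # [1, 2, 3]
```

Ending the backfill window just before today keeps it from overlapping the regular daily run, so the two never process the same day twice.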

Encountering problems

Backfilling data presents a unique set of problems during the extract, transform, and load (ETL) process of your data pipelines. While there may be other challenges, three main problems should concern data engineers during the backfilling process:

Maintaining idempotency - Running the same backfill more than once should leave the target in the same state as running it once. Backfills can break idempotency for a variety of reasons, including duplicate inserts, re-runs after incomplete backfills, and data inconsistencies across different systems.

Ensuring data type integrity - Schema changes happen and can cause backfill pipelines to fail. These are usually changes made to data types over the lifespan of a system. For instance, if a column in the database was originally defined as an integer but later changed to a string, the backfill pipeline may fail.

Handling large volumes of data - Backfilling large volumes of data can consume significant CPU, memory, and disk resources, leading to slow performance and possible failure of the data pipeline.

Fortunately, there are strategies to mitigate many of the problems above.

Mitigation strategies

Backfilling data is critical to ensuring that data systems are complete and accurate. By following best practices, data engineers can mitigate common challenges and maintain data integrity throughout the backfill process. Below are some strategies to help you implement a successful backfill.

Use and test unique keys - Ensure each record has a unique identifier, and implement logic in your pipeline's data exporters that enforces uniqueness. Also include data validation tests to confirm your records are unique.
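Here is a minimal sketch of both halves of this advice, using Python's built-in `sqlite3` module as a stand-in for a real target database. The `orders` table, its columns, and the function names are hypothetical. The exporter uses an upsert keyed on the unique identifier, so re-running the same batch does not duplicate rows, and a separate validation query checks for duplicate keys. The `ON CONFLICT ... DO UPDATE` syntax assumes SQLite 3.24 or newer; other databases spell upserts differently (for example `MERGE`).

```python
import sqlite3


def create_target(conn):
    # order_id is the unique identifier every record must carry.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        " order_id INTEGER PRIMARY KEY,"
        " amount REAL NOT NULL)"
    )


def export_rows(conn, rows):
    """Upsert rows so re-running the same backfill does not duplicate them."""
    conn.executemany(
        "INSERT INTO orders (order_id, amount) VALUES (?, ?) "
        "ON CONFLICT(order_id) DO UPDATE SET amount = excluded.amount",
        rows,
    )
    conn.commit()


def assert_unique(conn):
    """Data validation test: no order_id may appear more than once."""
    dupes = conn.execute(
        "SELECT order_id, COUNT(*) FROM orders "
        "GROUP BY order_id HAVING COUNT(*) > 1"
    ).fetchall()
    assert not dupes, f"duplicate keys found: {dupes}"


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    create_target(conn)
    batch = [(1, 10.0), (2, 25.5)]
    export_rows(conn, batch)
    export_rows(conn, batch)  # simulate re-running an incomplete backfill
    assert_unique(conn)
    print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 2
```

Here the primary key already prevents duplicates, so the validation test is a safety net; it matters most on warehouses that do not enforce uniqueness constraints.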

Validate and transform data - Identify data type mismatches during the pre-backfill analysis of your source system. Any attribute whose data type in the historical data differs from the target schema must be transformed before the records are inserted into the target database.
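A minimal sketch of the pre-backfill analysis and transformation step, in plain Python. The `TARGET_SCHEMA` mapping and the column names are hypothetical; they reuse the example of a column that started out as an integer and later became a string. `profile_types` reports which types actually appear in the historical rows, and `conform` casts each attribute to the target type before insertion.

```python
# Hypothetical target schema: column name -> expected Python type.
TARGET_SCHEMA = {"customer_id": str, "amount": float}


def profile_types(rows):
    """Pre-backfill analysis: collect the type names seen in each column."""
    seen = {}
    for row in rows:
        for col, value in row.items():
            seen.setdefault(col, set()).add(type(value).__name__)
    return seen


def conform(row, schema=TARGET_SCHEMA):
    """Cast each attribute to its target type; missing or None values stay None."""
    out = {}
    for col, expected in schema.items():
        value = row.get(col)
        # A value that cannot be cast raises ValueError, so bad rows fail loudly.
        out[col] = None if value is None else expected(value)
    return out


if __name__ == "__main__":
    # Historical rows written before the schema change.
    historical = [{"customer_id": 1001, "amount": "19.99"}]
    print(profile_types(historical))  # {'customer_id': {'int'}, 'amount': {'str'}}
    print([conform(row) for row in historical])  # [{'customer_id': '1001', 'amount': 19.99}]
```

Raising on uncastable values is a deliberate choice in this sketch: it is usually safer to stop a backfill than to silently load malformed history.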

Batch process or incrementally load data - Extracting or loading data in batches reduces strain on the source and target systems. Data engineers should also consider running large backfills during off-peak hours to reduce downtime of the system or application.
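One common way to batch a large backfill is keyset pagination: read a fixed number of rows ordered by a key, then continue from the last key seen. The sketch below uses `sqlite3` for both source and target; the `events` table, `make_db` helper, and batch size are hypothetical. Committing once per batch bounds memory use and means a failure only loses the batch in flight. Scheduling the job for off-peak hours is left to your orchestrator.

```python
import sqlite3


def make_db(n=0):
    """Hypothetical helper: an in-memory events table with n rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
    conn.executemany(
        "INSERT INTO events VALUES (?, ?)", [(i, f"e{i}") for i in range(1, n + 1)]
    )
    conn.commit()
    return conn


def copy_in_batches(source, target, batch_size=1000):
    """Copy events from source to target in batches; return the number of batches."""
    last_id = 0
    batches = 0
    while True:
        rows = source.execute(
            "SELECT id, payload FROM events WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            break
        # INSERT OR REPLACE keeps re-runs idempotent on the primary key.
        target.executemany("INSERT OR REPLACE INTO events (id, payload) VALUES (?, ?)", rows)
        target.commit()  # commit per batch so a failure only loses the current batch
        last_id = rows[-1][0]
        batches += 1
    return batches


if __name__ == "__main__":
    src, dst = make_db(2500), make_db(0)
    print(copy_in_batches(src, dst, batch_size=1000))  # 3
```

Keyset pagination avoids `OFFSET`, which on many databases gets slower as the offset grows.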

Once you understand the best practices for implementing a successful data backfill, it’s time to put those learnings to work.

Apply backfills in Mage

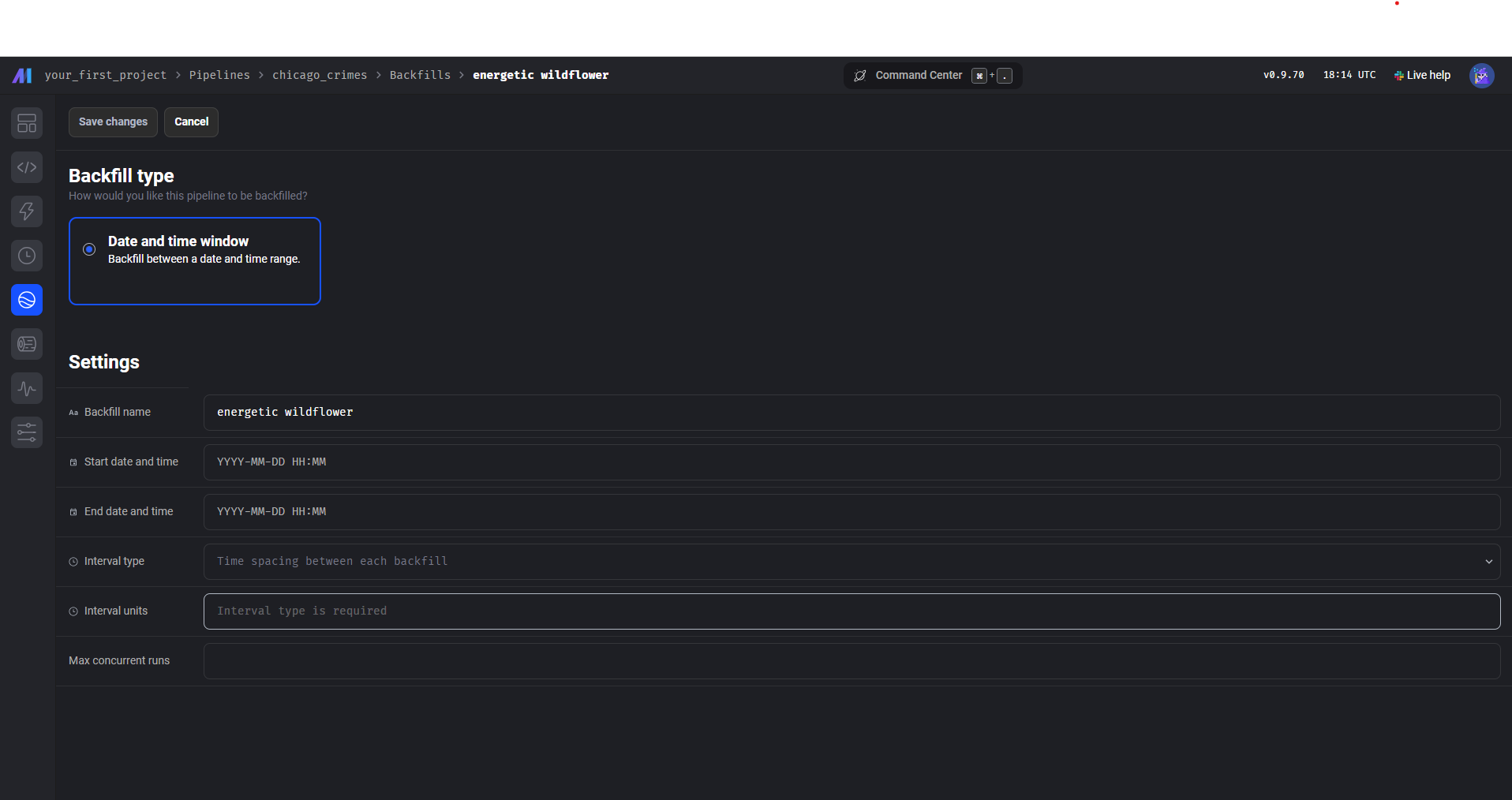

Mage offers two strategies for backfilling data. The first is a no-code UI that lets data engineers generate backfills based on a date and time window. The second is to custom code a backfill that meets the requirements of your data pipeline. This tutorial focuses on implementing a backfill with the no-code UI.

No code UI in Mage

After you select a pipeline, the backfill UI is located in the left sidekick. Use the date and time window method for simple, date-based backfills. A typical use case for the Date and Time Window UI is a system failure that stops data from moving from the source system to the target system for a period of time. This is especially relevant for daily batch pipelines.
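Before filling in the window, it helps to know exactly which days are missing. A minimal sketch, assuming you can list the daily partitions already present in the target (the input list and helper names are hypothetical, not part of Mage): it finds the missing days and groups consecutive ones into start and end windows you could enter into the backfill form.

```python
from datetime import date, timedelta


def missing_days(loaded_days, start, end):
    """Return each day in [start, end] that has no data in the target."""
    loaded = set(loaded_days)
    gaps = []
    day = start
    while day <= end:
        if day not in loaded:
            gaps.append(day)
        day += timedelta(days=1)
    return gaps


def contiguous_windows(gaps):
    """Group consecutive missing days into (start, end_exclusive) windows."""
    windows = []
    for day in gaps:
        if windows and windows[-1][1] == day:
            windows[-1] = (windows[-1][0], day + timedelta(days=1))
        else:
            windows.append((day, day + timedelta(days=1)))
    return windows


if __name__ == "__main__":
    # An outage left June 4-6 and June 9 empty.
    loaded = [date(2024, 6, d) for d in (1, 2, 3, 7, 8, 10)]
    gaps = missing_days(loaded, date(2024, 6, 1), date(2024, 6, 10))
    for window_start, window_end in contiguous_windows(gaps):
        print(window_start, "->", window_end)
    # 2024-06-04 -> 2024-06-07
    # 2024-06-09 -> 2024-06-10
```

If your loads are idempotent, you could instead cover all gaps with a single window from the first missing day to the last; separate windows just avoid reprocessing days that loaded correctly.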

Backfill Name - Mage provides a default name for your backfill, which you can keep or change.

Start and end date and time - The range of data you want to extract from your source to backfill your target system.

Interval Types - The interval used to split the date range, such as hourly, daily, weekly, or monthly.

Interval Units - The number of interval-type units in each backfill run. For example, a 28-day range with interval type daily and 7 interval units results in 4 backfill runs of 7 days each.

Max Concurrent Runs - The maximum number of pipeline runs allowed to execute simultaneously.
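To see how these fields fit together, here is a rough model in plain Python. It is not Mage's implementation, and Mage's exact boundary handling may differ: `plan_runs` splits the range into windows of interval units times interval type (month and year intervals need calendar arithmetic and are left out), and `run_backfill` executes one hypothetical `run_pipeline` call per window with at most `max_concurrent_runs` running at once.

```python
from concurrent.futures import ThreadPoolExecutor
from datetime import datetime, timedelta

# Hypothetical mapping of interval types; month/year are omitted for simplicity.
INTERVALS = {
    "hour": timedelta(hours=1),
    "day": timedelta(days=1),
    "week": timedelta(weeks=1),
}


def plan_runs(start, end, interval_type, interval_units):
    """Split [start, end) into windows of interval_units * interval_type."""
    step = INTERVALS[interval_type] * interval_units
    runs = []
    window_start = start
    while window_start < end:
        window_end = min(window_start + step, end)  # last window may be shorter
        runs.append((window_start, window_end))
        window_start = window_end
    return runs


def run_backfill(runs, run_pipeline, max_concurrent_runs):
    """Run one pipeline call per window, at most max_concurrent_runs at a time."""
    with ThreadPoolExecutor(max_workers=max_concurrent_runs) as pool:
        # pool.map returns results in the same order as the windows.
        return list(pool.map(lambda window: run_pipeline(*window), runs))


if __name__ == "__main__":
    runs = plan_runs(datetime(2024, 1, 1), datetime(2024, 1, 29), "day", 7)
    print(len(runs))  # 4
    labels = run_backfill(runs, lambda s, e: f"{s:%m-%d}..{e:%m-%d}", max_concurrent_runs=2)
    print(labels)  # ['01-01..01-08', '01-08..01-15', '01-15..01-22', '01-22..01-29']
```

This reproduces the 28-day example above: daily intervals with 7 units yield 4 runs. A lower concurrency limit trades total backfill time for less load on the source and target systems.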

To generate a backfill using the Date and Time Window UI, complete the following steps:

Select the backfill button from the pipeline's left vertical sidekick

Click the Create New Backfill button, which takes you to the screen shown in the image above

Keep the provided backfill name or change it to whatever you want to call the backfill

Enter the custom start date and time

Enter the custom end date and time

Enter an interval type, such as day, week, month, or year

Enter the number of interval units

Click Save changes

Once the backfill is saved, it is stored in the triggers and is ready to run

Click Start backfill to complete the process.

Conclusion

Implementing backfills is essential for maintaining the integrity and completeness of your data pipelines. By leveraging Mage's powerful backfilling capabilities, you can seamlessly integrate historical data and build resilience into your data workflows. Whether using the intuitive no-code UI for simple date and time window backfills or custom coding for more complex requirements, Mage provides the flexibility and tools you need to ensure your data pipelines remain robust and reliable. Start applying these best practices and strategies today to enhance your data pipeline's performance and reliability.